Great Expectations (GX) is a data quality web application that helps businesses create flexible rules and apply their industry expertise as Expectations (tests) for their data. Initially an open source tool, GX quickly grew to become one of the fastest growing tools used by data practitioners.

Impact: Improved onboarding clarity and informed a strategic product direction.

Product Designer, UX Researcher & Designer, Visual Designer

Data is used everywhere in the world, but have you ever actually seen what data looks like? Excel sheets, tables, schemas, nulls, security, and so much more. Great Expectations (GX) lets users ingest data into the GX Cloud app and apply rules ("Expectations") to ensure that the data is what they expect.

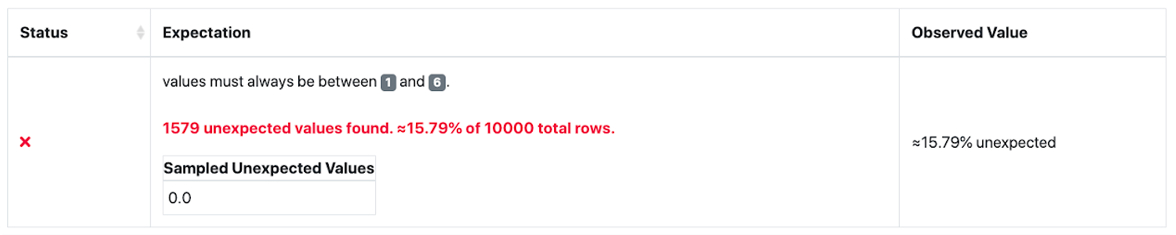

Great Expectations users who enjoyed the flexibility of GX OSS generated "Data Docs". They found the results GX provided from their defined tests extremely helpful, but those results lacked:

We conducted our research by interviewing multiple companies and users to show off GX capabilities, understand user needs and pain points, and capture moments of delight while presenting GX Cloud. Interviewees included both existing users of GX OSS and new users just starting out on their data quality journey.

We analyzed the feedback from interviews and used User Journey Mapping to gain insight into the various paths users take to reach our value moment. Our success metric was the number of users who were able to sign up, create Expectations, and run them against their data.

We identified groups of needs that users expressed interest in. One grouping was a desire for better visual representation of their data, to help them understand it, improve reporting, and make it easier to hand data off to other departments within their organization.

We needed to understand the business impact of offering this feature. Due to the sensitive nature of data, we were limited in the following:

Our immediate goal was to understand at which points in the user journey users dropped off and churn increased. Users reported a lack of navigation and clarity around the many datasets that each organization needed to put under test. The Product team determined that users would have many datasets under test, each needing to be tested at a different cadence.

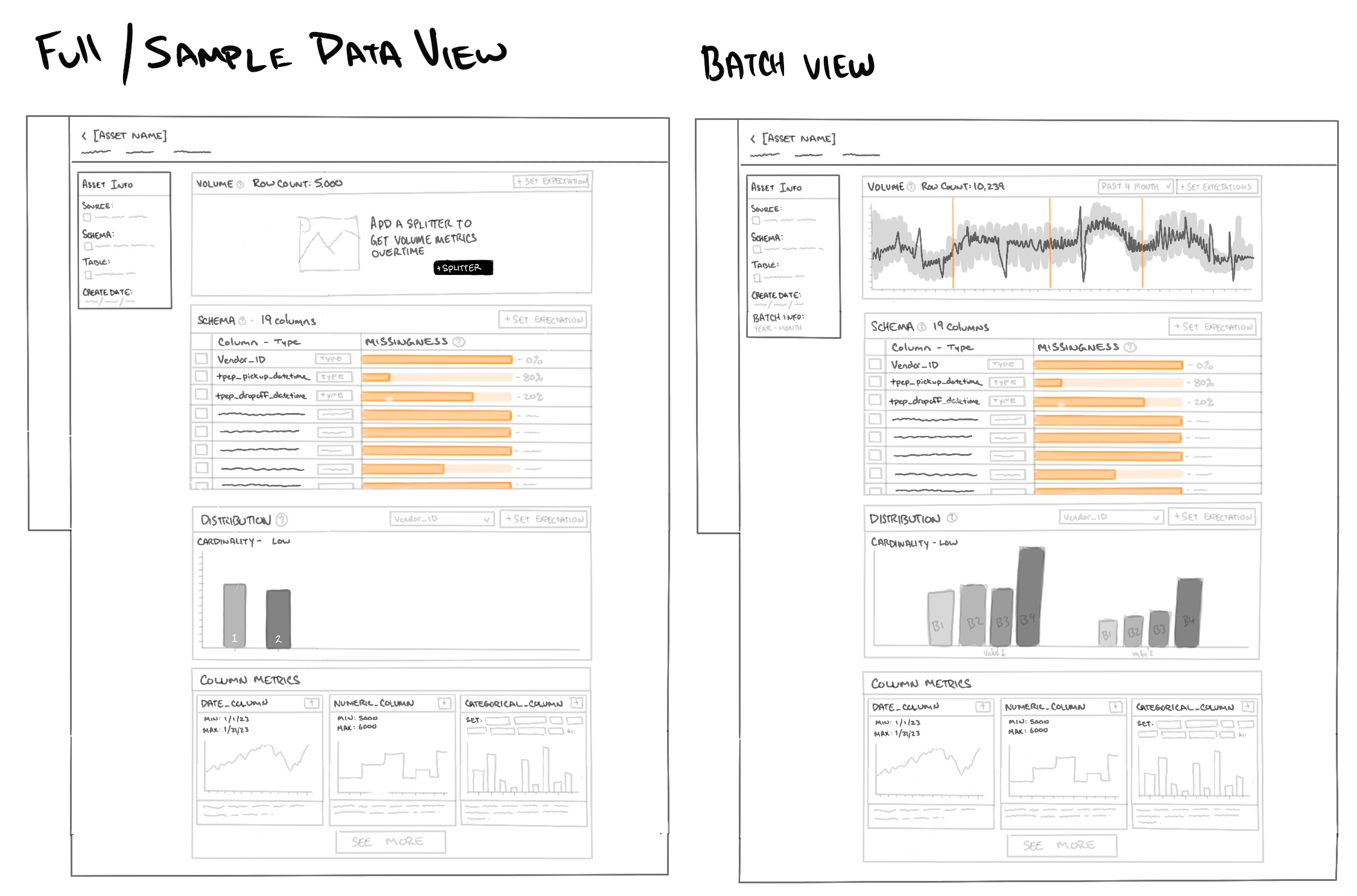

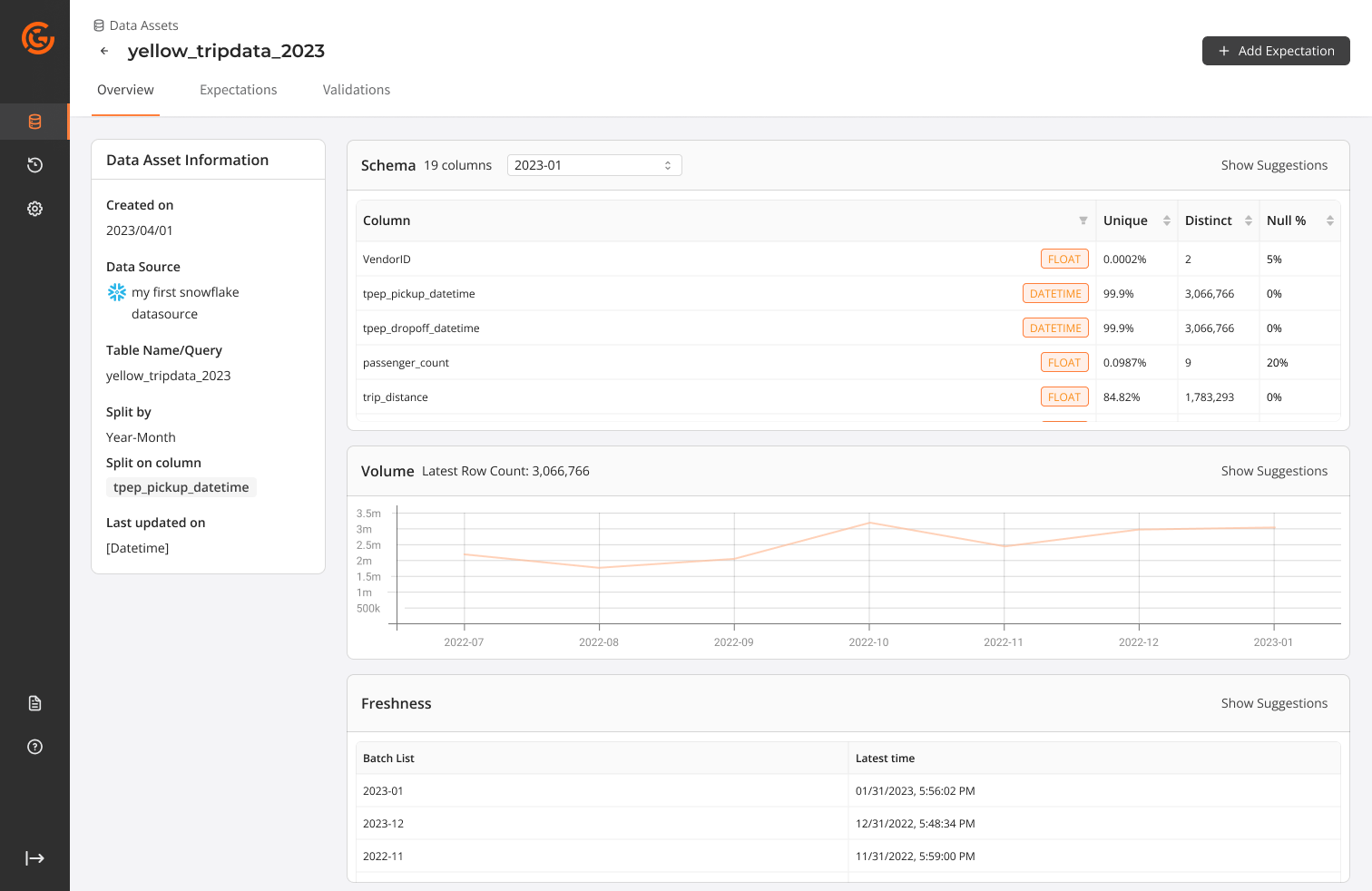

We then decided to create a dashboard that gave users an understandable snapshot of the data they had added to GX Cloud. Over 65% of users interviewed mentioned wanting a view similar to our Data Docs, or a view of all their data, to better grasp the overall status of the many datasets that needed testing.

We first needed to understand where in the user flow a visual dashboard would fit best. Using our existing User Journey Map, we concluded that the ideal place was right after users had connected to a datasource. GX Cloud would then quickly process the data, and users would see a dashboard giving them a snapshot of their data in its current state.

Our team decided to make a lightweight dashboard. We opted for simple visuals, such as tables and graphs, that surfaced the same information users loved seeing in OSS Data Docs.

Designing the prototype was quick and simple. Using our Ant Design System for Figma, we pulled together a simple prototype from existing components. We used out-of-the-box components instead of custom ones because they were easier to implement. A few components needed to be "detached" to meet some of our needs.

After two sessions of discussion and iteration, we felt the prototype was ready to take back to our customers for feedback. We focused on 3 main topics.

Our Product team reviewed the set of designs with customers and presented the prototype to 5 companies to see whether the data dashboard addressed their needs. Since we were returning to users we had spoken with previously, we did not create a new scenario but opted to gain a better understanding using the following tasks. Interviews were led by a Project Manager, 2 Engineers, and a Product Designer.

After a successful connection to the user's data:

∙ Does the view give you insight into the current dataset?

∙ What are the next steps you would take?

∙ Do the suggested Expectations meet your needs?

Overall, the feedback from users was positive. The visual aspect was helpful, but new pain points and needs surfaced. From the interviews we extracted the following:

Users familiar with GX OSS had access to a feature called profiling, which let them apply a large number of Expectations to a whole table. The problem was that it generated too many base Expectations, creating clutter and unnecessary Expectations.

We learned a lot from the feedback users gave us. Over 50% of users expressed interest in having this view, but our discussions uncovered a more pressing issue: users wanted to set up basic tests that covered their needs, and they needed to repeat this process across a large number of datasets. We determined that the dashboard did not address users' most urgent needs, and it was ultimately shelved as future work.

We then pivoted to letting users leverage AI to analyze their data and recommend basic Expectations so they could get set up and running quickly. Users select from a list of data quality issues we have identified, choose which Expectations they would like the GX Cloud app to create as a foundation, and then refine and tune those Expectations to their needs.

This feature release, although quite small, improved our completion rate by 30% and reduced drop-offs by 46%. We offered users the option of automatic profiling of their data, which generated and applied 2-5 Expectations to give them a foundation for continuing their data quality journey.
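To make the recommendation flow described above more concrete, here is a minimal sketch of how a small set of starter Expectations could be proposed from a data sample. It is an illustration only, not how GX Cloud is implemented: the case study describes AI-assisted analysis, while this sketch substitutes two simple rule-based checks. The `Suggestion` class, the `suggest_expectations` function, and the sample rows are hypothetical, the sketch does not call the GX library, and the Expectation names appear only as labels. The cap of 5 mirrors the 2-5 Expectations mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    """A proposed rule that the user can accept, reject, or tune later."""
    column: str
    expectation: str  # label for the rule to propose (not a GX API call)
    reason: str


def suggest_expectations(rows, max_suggestions=5):
    """Profile a sample (list of dicts) and propose a few basic rules.

    Hypothetical heuristics: a column with no missing values gets a
    not-null suggestion; if its values are also all distinct, it gets
    a uniqueness suggestion as well.
    """
    if not rows:
        return []

    suggestions = []
    for column in rows[0].keys():
        values = [row.get(column) for row in rows]
        complete = all(value is not None for value in values)
        if not complete:
            continue  # skip columns with gaps; the user can add rules manually
        suggestions.append(Suggestion(
            column,
            "expect_column_values_to_not_be_null",
            "no missing values in the sample",
        ))
        if len(set(values)) == len(values):
            suggestions.append(Suggestion(
                column,
                "expect_column_values_to_be_unique",
                "every sampled value is distinct",
            ))

    # Keep the list short so users start from a small foundation.
    return suggestions[:max_suggestions]


if __name__ == "__main__":
    sample = [
        {"order_id": 1, "email": "a@example.com", "coupon": None},
        {"order_id": 2, "email": "b@example.com", "coupon": "SAVE10"},
        {"order_id": 3, "email": "a@example.com", "coupon": None},
    ]
    for s in suggest_expectations(sample):
        print(f"{s.column}: {s.expectation} ({s.reason})")
```

Capping the list reflects the lesson from OSS profiling noted earlier: generating too many base Expectations created clutter, so a short, reviewable list is the safer starting point.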
